Introduction

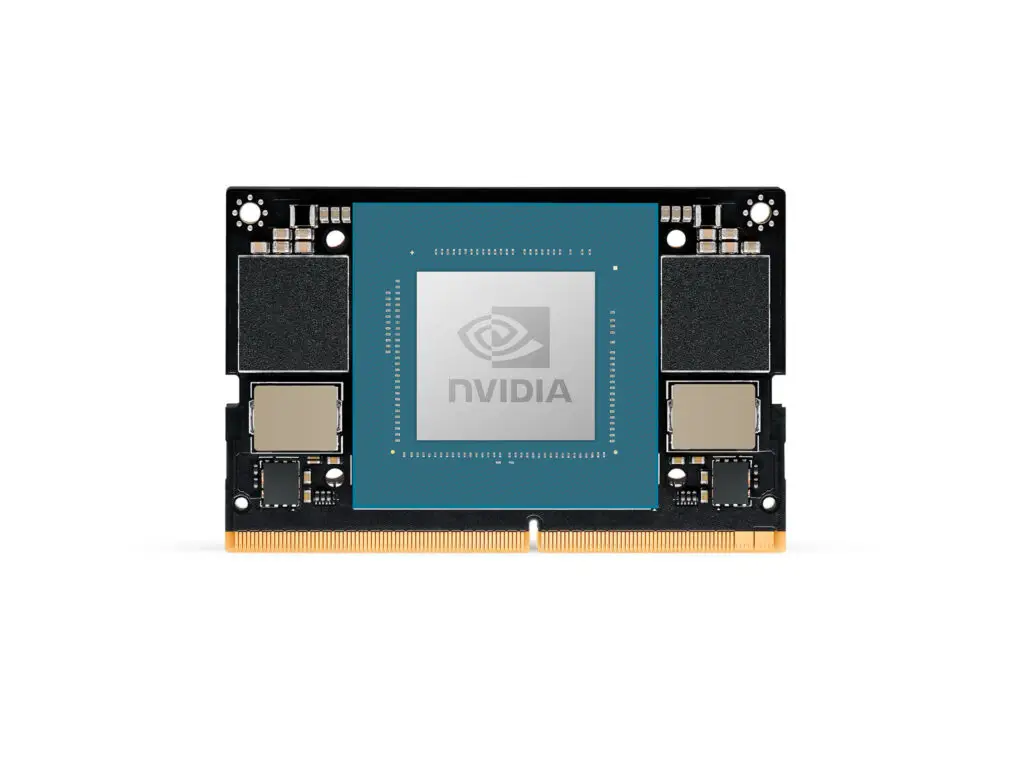

The NVIDIA Jetson Orin Nano series is the latest in NVIDIA’s line of compact AI computing modules for edge devices. Building on the legacy of the Jetson Nano, it targets developers, hobbyists, and small-scale commercial applications, offering an affordable entry point into AI, machine learning, and robotics. Here is a closer look at what the Jetson Orin Nano series offers:

Features

- Compact Design:

- The Jetson Orin Nano retains the compact form factor of its predecessors, making it ideal for small projects or embedded systems where space is at a premium.

- AI Performance:

- Powered by an NVIDIA Ampere architecture GPU and paired with 4GB or 8GB of LPDDR5 memory. The 4GB module has 512 CUDA cores and 16 Tensor cores, while the 8GB module has 1024 CUDA cores and 32 Tensor cores, providing significant AI compute capabilities in a small package.

- Memory and Bandwidth:

- Offers up to 68.2 GB/s of memory bandwidth on the 8GB module (roughly half that on the 4GB module), so model weights and sensor data can be moved quickly during AI tasks.

- Versatile I/O:

- Equipped with interfaces such as USB 3.2, Gigabit Ethernet, PCIe, DisplayPort, and MIPI CSI, allowing for connectivity with a variety of sensors, cameras, displays, and peripherals.

- Power Efficiency:

- Supports configurable power modes between 5W and 15W, depending on the module, making it suitable for battery-operated devices or power-constrained environments.

- Real-Time Capabilities:

- Although not as advanced as higher-end Jetson modules, it still includes some real-time processing capabilities for simple control tasks.

- Security:

- Includes basic security features like secure boot to ensure system integrity.
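
The bandwidth and power figures above can be turned into rough planning numbers. The following is a minimal sketch, not a benchmark: the function names, the 50 Wh battery, and the 0.9 conversion efficiency are hypothetical values chosen for illustration, and the estimates ignore carrier-board and peripheral power as well as caching and compute time.

```python
def weight_stream_time_ms(weights_gb: float, bandwidth_gb_s: float = 68.2) -> float:
    """Lower bound on the time to read all model weights once from memory.

    Uses the 68.2 GB/s peak figure quoted above; sustained bandwidth on a
    real workload will be lower, so treat the result as a best case.
    """
    if weights_gb < 0 or bandwidth_gb_s <= 0:
        raise ValueError("weights_gb must be >= 0 and bandwidth_gb_s must be > 0")
    return weights_gb / bandwidth_gb_s * 1000.0


def battery_runtime_hours(capacity_wh: float, power_w: float,
                          efficiency: float = 0.9) -> float:
    """Idealized runtime on a battery at a constant module power draw.

    `efficiency` is an assumed loss factor for voltage conversion
    (hypothetical value, not taken from NVIDIA documentation).
    """
    if capacity_wh < 0 or power_w <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid capacity, power, or efficiency")
    return capacity_wh * efficiency / power_w


if __name__ == "__main__":
    # Best-case time to stream 1 GB of weights at peak bandwidth.
    print(f"1 GB of weights: {weight_stream_time_ms(1.0):.1f} ms per pass")
    # Runtime at the two ends of the 5W to 15W range, for a hypothetical 50 Wh pack.
    for watts in (5, 15):
        hours = battery_runtime_hours(50, watts)
        print(f"{watts} W mode: {hours:.1f} h")
```

Under these assumptions, a 50 Wh pack lasts about 9 hours at 5W and about 3 hours at 15W, and streaming 1 GB of weights takes at least roughly 14.7 ms per pass. Real deployments should be measured on the target hardware.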

Applications

- Educational Projects:

- Perfect for teaching AI, machine learning, and robotics due to its affordability and ease of use.

- Hobbyist Projects:

- Suitable for DIY projects involving AI, from home automation to small-scale robotics.

- IoT Devices:

- Can power smart home devices, IoT sensors, and other edge AI applications where performance and cost-effectiveness are key.

- Entry-Level Robotics:

- Ideal for robotics enthusiasts or small robotics projects requiring basic AI capabilities.

Development Ecosystem

- JetPack SDK:

- NVIDIA provides JetPack, an SDK that includes the necessary tools for developing, deploying, and optimizing AI applications for the Jetson platform.

- AI Framework Support:

- Supports popular frameworks like TensorFlow, PyTorch, and others, allowing developers to leverage pre-trained models.

- Community and Support:

- Access to a supportive community, extensive documentation, and NVIDIA’s developer forums.

- Ease of Development:

- Designed with beginners in mind, offering a lower entry barrier for AI development.
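
A common first step after setting up a framework is to confirm that it can actually see the GPU. The sketch below assumes PyTorch is the framework in use; `describe_accelerator` is a hypothetical helper name, and the function simply reports that PyTorch is missing if it is not installed.

```python
def describe_accelerator() -> dict:
    """Report whether PyTorch is installed and whether it can see a CUDA GPU."""
    info = {
        "torch_installed": False,
        "torch_version": None,
        "cuda_available": False,
        "device_name": None,
    }
    try:
        import torch  # imported lazily so the script also runs without PyTorch
    except ImportError:
        return info

    info["torch_installed"] = True
    info["torch_version"] = torch.__version__
    if torch.cuda.is_available():
        info["cuda_available"] = True
        # Device 0 is the integrated GPU on a single-GPU board.
        info["device_name"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    for key, value in describe_accelerator().items():
        print(f"{key}: {value}")
```

If `cuda_available` comes back `False` on a Jetson, one likely cause is a CPU-only PyTorch build rather than one built for the installed JetPack release.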

Challenges and Considerations

- Performance Limitations:

- While impressive for its size, the Orin Nano is less capable than higher-end Jetson modules and is best suited to less demanding AI tasks.

- Memory:

- The base model comes with 4GB of memory, which is shared between the CPU and GPU and might limit the complexity of AI models that can be run without optimization such as reduced precision or quantization.

- Development Complexity:

- Although designed to be user-friendly, there’s still a learning curve for those new to AI or embedded systems development.

- Cost:

- While more affordable than other Jetson modules, it still represents an investment for hobbyists or educational settings.
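
To make the memory constraint concrete, the size of a model's weights can be estimated from its parameter count and numeric precision. This is a rough sketch: the function names are hypothetical, and the 1.5 GiB reserved for the operating system, CUDA context, and activations is an assumed allowance, not a measured figure.

```python
# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params: int, precision: str = "fp32") -> float:
    """Memory needed for the weights alone, in GiB."""
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision!r}")
    return num_params * BYTES_PER_PARAM[precision] / 2**30


def fits_in_memory(num_params: int, precision: str,
                   total_gib: float = 4.0, reserved_gib: float = 1.5) -> bool:
    """Check the weights against the memory left after an assumed reserve.

    `reserved_gib` is a hypothetical allowance for the OS, runtime, and
    activations; measure the real value on the target device.
    """
    return weight_memory_gib(num_params, precision) <= total_gib - reserved_gib


if __name__ == "__main__":
    params = 1_000_000_000  # a hypothetical 1-billion-parameter model
    for precision in BYTES_PER_PARAM:
        size = weight_memory_gib(params, precision)
        verdict = "fits" if fits_in_memory(params, precision) else "does not fit"
        print(f"{precision}: {size:.2f} GiB, {verdict} on the 4GB module")
```

For this hypothetical 1-billion-parameter model, the weights take about 3.73 GiB in FP32, 1.86 GiB in FP16, and 0.93 GiB in INT8, so only the reduced-precision variants fit within the assumed budget on the 4GB module.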

Conclusion

The NVIDIA Jetson Orin Nano series brings capable AI computing to a broader audience, including students, hobbyists, and small businesses. Its compact size, power efficiency, and support for popular AI frameworks make it an excellent platform for learning, prototyping, and developing AI-driven projects at the edge without extensive computing resources. Whether for educational purposes, hobbyist projects, or entry-level commercial applications, the Orin Nano is a stepping stone to the future of intelligent systems.